Building data pipelines is a core component of data science at a startup. In order to build data products, you need to be able to collect data points from millions of users and process the results in near real-time. While my previous blog post discussed what type of data to collect and how to send data to an endpoint, this post will discuss how to process data that has been collected, enabling data scientists to work with the data. The coming blog post on model production will discuss how to deploy models on this data platform.

Typically, the destination for a data pipeline is a data lake, such as Hadoop or parquet files on S3, or a relational database, such as Redshift. An ideal data pipeline should have the following properties:

- Low Event Latency: Data scientists should be able to query recent event data in the pipeline, within minutes or seconds of the event being sent to the data collection endpoint. This is useful for testing purposes and for building data products that need to update in near real-time.

- Scalability: A data pipeline should be able to scale to billions of data points, and potentially trillions as a product scales. A high performing system should not only be able to store this data, but make the complete data set available for querying.

- Interactive Querying: A high functioning data pipeline should support both long-running batch queries and smaller interactive queries that enable data scientists to explore tables and understand the schema without having to wait minutes or hours when sampling data.

- Versioning: You should be able to make changes to your data pipeline and event definitions without bringing down the pipeline and suffering data loss. This post will discuss how to build a pipeline that supports different event definitions, in the case of changing an event schema.

- Monitoring: If an event is no longer being received, or tracking data is no longer being received for a particular region, then the data pipeline should generate alerts through tools such as PagerDuty.

- Testing: You should be able to test your data pipeline with test events that do not end up in your data lake or database, but that do test components in the pipeline.
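Two of the properties above, versioning and testing, can be sketched in a few lines of Python. This is a minimal illustration rather than the pipeline built later in this series: the `version` and `is_test` fields, the `uid` to `user_id` rename, and the parser names are all assumptions made up for the example.

```python
from typing import Optional

# Hypothetical event definitions: version 2 renamed "uid" to "user_id".
def parse_v1(event: dict) -> dict:
    return {"user_id": event["uid"], "type": event["type"]}

def parse_v2(event: dict) -> dict:
    return {"user_id": event["user_id"], "type": event["type"]}

# Both versions stay registered, so old clients keep working after a schema change.
PARSERS = {1: parse_v1, 2: parse_v2}

def process(event: dict) -> Optional[dict]:
    """Return a normalized record, or None if the event should not be stored."""
    parser = PARSERS[event.get("version", 1)]
    record = parser(event)  # test events still exercise the parsing step
    if event.get("is_test", False):
        return None  # test events never reach the data lake or database
    return record

old_client = process({"version": 1, "uid": "a", "type": "login"})
new_client = process({"version": 2, "user_id": "b", "type": "login"})
test_event = process({"version": 2, "user_id": "c", "type": "login", "is_test": True})
```

Keeping one parser per version means a schema change is an addition to the registry rather than a redeploy that drops in-flight events from older clients.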

There are a number of other useful properties that a data pipeline should have, but this is a good starting point for a startup. As you start to build additional components that depend on your data pipeline, you’ll want to set up tooling for fault tolerance and automating tasks.

This post will show how to set up a scalable data pipeline that sends tracking data to a data lake, database, and subscription service for use in data products. I’ll discuss the different types of data in a pipeline, the evolution of data pipelines, and walk through an example pipeline implemented on GCP with PubSub, DataFlow, and BigQuery.

Before deploying a data pipeline, you’ll want to answer the following questions, which resemble our questions about tracking specs:

- Who owns the data pipeline?

- Which teams will be consuming data?

- Who will QA the pipeline?

In a small organization, a data scientist may be responsible for the pipeline, while larger organizations usually have an infrastructure team that is responsible for keeping the pipeline operational. It’s also useful to know which teams will be consuming the data, so that you can stream data to the appropriate teams. For example, marketing may need real-time data of landing page visits to perform attribution for marketing campaigns. And finally, the data quality of events passed to the pipeline should be thoroughly inspected on a regular basis. Sometimes a product update will cause a tracking event to drop relevant data, and a process should be set up to capture these types of changes in data.
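One way to catch a product update that silently drops tracking data is to measure how often each event type is missing its required fields. The sketch below assumes a hypothetical tracking spec (`REQUIRED_FIELDS`) and an arbitrary alert threshold; a real setup would read recent events from the pipeline and forward the flagged types to an alerting tool.

```python
from collections import Counter

# Hypothetical tracking spec: fields that each event type must include.
REQUIRED_FIELDS = {"session_start": {"user_id", "platform"}}
ALERT_THRESHOLD = 0.1  # assumed: flag when over 10% of events are incomplete

def missing_field_rates(events, required=REQUIRED_FIELDS):
    """Return the fraction of events per type that lack a required field."""
    totals, missing = Counter(), Counter()
    for event in events:
        etype = event.get("type")
        if etype not in required:
            continue  # event types without a spec are not checked
        totals[etype] += 1
        if not required[etype].issubset(event.keys()):
            missing[etype] += 1
    return {etype: missing[etype] / totals[etype] for etype in totals}

sample = [
    {"type": "session_start", "user_id": "u1", "platform": "web"},
    {"type": "session_start", "user_id": "u2"},  # platform was dropped
    {"type": "page_view", "user_id": "u1"},
]
rates = missing_field_rates(sample)
flagged = [etype for etype, rate in rates.items() if rate > ALERT_THRESHOLD]
```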

Types of Data

Data in a pipeline is often referred to by different names based on the amount of modification that has been performed. Data is typically classified with the following labels:

- Raw Data: Tracking data with no processing applied. This is data stored in the message encoding format used to send tracking events, such as JSON. Raw data does not yet have a schema applied. It’s common to send all tracking events as raw events, because all events can be sent to a single endpoint and schemas can be applied later on in the pipeline.

- Processed Data: Processed data is raw data that has been decoded into event-specific formats, with a schema applied. For example, JSON tracking events that have been translated into session start events with a fixed schema are considered processed data. Processed events are usually stored in different event tables/destinations in a data pipeline.

- Cooked Data: Processed data that has been aggregated or summarized is referred to as cooked data. For example, processed data could include session start and session end events, and be used as input to cooked data that summarizes daily activity for a user, such as number of sessions and total time on site for a web page.
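The three labels above can be illustrated with a small Python sketch. The event fields (`type`, `user_id`, `ts`) and the sample values are assumptions chosen for the example, not a schema from this series.

```python
import json
from collections import defaultdict

# Raw data: JSON strings exactly as they were sent, with no schema applied.
raw_events = [
    '{"type": "session_start", "user_id": "u1", "ts": 100}',
    '{"type": "session_end", "user_id": "u1", "ts": 160}',
    '{"type": "session_start", "user_id": "u1", "ts": 500}',
    '{"type": "session_end", "user_id": "u1", "ts": 530}',
]

def to_processed(raw: str) -> dict:
    """Decode a raw event and apply a fixed schema (field names and types)."""
    data = json.loads(raw)
    return {
        "type": str(data["type"]),
        "user_id": str(data["user_id"]),
        "ts": int(data["ts"]),
    }

def to_cooked(processed: list) -> dict:
    """Summarize processed events into session counts and total seconds per user."""
    summary = defaultdict(lambda: {"sessions": 0, "seconds": 0})
    open_sessions = {}
    for event in sorted(processed, key=lambda e: e["ts"]):
        user = event["user_id"]
        if event["type"] == "session_start":
            open_sessions[user] = event["ts"]
        elif event["type"] == "session_end" and user in open_sessions:
            summary[user]["sessions"] += 1
            summary[user]["seconds"] += event["ts"] - open_sessions.pop(user)
    return dict(summary)

processed = [to_processed(raw) for raw in raw_events]
cooked = to_cooked(processed)
```

Here `cooked` holds one summary row per user, which is the kind of table you would hand to other teams.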

Data scientists will typically work with processed data, and use tools to create cooked data for other teams. This post will discuss how to build a data pipeline that outputs processed data, while the Business Intelligence post will discuss how to add cooked data to your pipeline.

The Evolution of Data Pipelines

Over the past two decades the landscape for collecting and analyzing data has changed significantly. Rather than storing data locally via log files, modern systems can track activity and apply machine learning in near real-time. Startups might want to use one of the earlier approaches for initial testing, but should really look to more recent approaches for building data pipelines. Based on my experience, I’ve noticed four different approaches to pipelines:

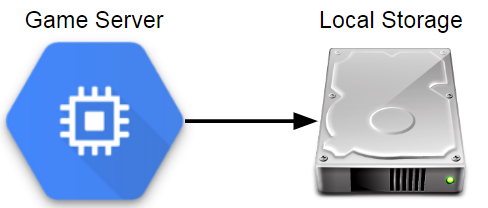

- Flat File Era: Data is saved locally on game servers

- Database Era: Data is staged in flat files and then loaded into a database

- Data Lake Era: Data is stored in Hadoop/S3 and then loaded into a DB

- Serverless Era: Managed services are used for storage and querying

Each of the steps in this evolution supports the collection of larger data sets, but may introduce additional operational complexity. For a startup, the goal is to be able to scale data collection without scaling operational resources, and the progression to managed services provides a nice solution for growth.

The data pipeline that we’ll walk through in the next section of this post is based on the most recent era of data pipelines, but it’s useful to walk through different approaches because the requirements for different companies may fit better with different architectures.

Flat File Era

I got started in data science at Electronic Arts in 2010, before EA had an organization built around data. While many game companies were already collecting massive amounts of data about gameplay, most telemetry was stored in the form of log files or other flat file formats that were stored locally on the game servers. Nothing could be queried directly, and calculating basic metrics such as monthly active users (MAU) took substantial effort.

At Electronic Arts, a replay feature was built into Madden NFL 11 which provided an unexpected source of game telemetry. After every game, a game summary in an XML format was sent to a game server that listed each play called, moves taken during the play, and the result of the down. This resulted in millions of files that could be analyzed to learn more about how players interacted with Madden football in the wild.

Storing data locally is by far the easiest approach to take when collecting gameplay data. For example, the PHP approach presented in the last post is useful for setting up a lightweight analytics endpoint. But this approach does have significant drawbacks.

This approach is simple and enables teams to save data in whatever format is needed, but it has no fault tolerance, does not store data in a central location, has significant latency in data availability, and lacks standard tooling for building an ecosystem for analysis. Flat files can work fine if you only have a few servers, but it’s not really an analytics pipeline unless you move the files to a central location. You can write scripts to pull data from log servers to a central location, but it’s not generally a scalable approach.
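To give a sense of why even a basic metric takes effort in this setup, here is a rough sketch of computing MAU from log files spread across servers. It assumes a hypothetical format of one JSON object per line with `user_id` and `date` fields, and uses temporary directories to stand in for the servers; real telemetry from this era was often XML or custom text formats.

```python
import json
import tempfile
from pathlib import Path

def monthly_active_users(log_dirs, month):
    """Count distinct user IDs seen in `month` (YYYY-MM) across all log files."""
    users = set()
    for log_dir in log_dirs:
        for path in Path(log_dir).glob("*.log"):
            with path.open() as handle:
                for line in handle:
                    line = line.strip()
                    if not line:
                        continue
                    event = json.loads(line)
                    if event["date"].startswith(month):
                        users.add(event["user_id"])
    return len(users)

# Stand-in for two game servers, each with its own local log file.
server_logs = {
    "server_a": [
        {"user_id": "u1", "date": "2010-08-01"},
        {"user_id": "u2", "date": "2010-08-15"},
    ],
    "server_b": [
        {"user_id": "u1", "date": "2010-08-20"},
        {"user_id": "u3", "date": "2010-09-01"},
    ],
}

with tempfile.TemporaryDirectory() as root:
    dirs = []
    for server, rows in server_logs.items():
        server_dir = Path(root) / server
        server_dir.mkdir()
        lines = "\n".join(json.dumps(row) for row in rows)
        (server_dir / "events.log").write_text(lines)
        dirs.append(server_dir)
    mau = monthly_active_users(dirs, "2010-08")
```

Every file on every server has to be read to answer a single question, which is why this approach stops being practical as the number of servers grows.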

Database Era

While I was at Sony Online Entertainment, we had game servers save event files to a central file server every couple of minutes. The file server then ran an ETL process about once an hour that fast loaded these event files into our analytics database, which was Vertica at the time. This process had a reasonable latency, about one hour from a game client sending an event to the data being queryable in our analytics database. It also scaled to a large volume of data, but required using a fixed schema for event data.

When I was at Twitch, we used a similar process for one of our analytics databases. The main difference from the approach at SOE was that instead of having game servers scp files to a central location, we used Amazon Kinesis to stream events from servers to a staging area on S3. We then used an ETL process to fast load data into Redshift for analysis. Since then, Twitch has shifted to a data lake approach, in order to scale to a larger volume of data and to provide more options for querying the datasets.

The databases used at SOE and Twitch were immensely valuable for both of the companies, but we did run into challenges as we scaled the amount of data stored. As we collected more detailed information about gameplay, we could no longer keep complete event history in our tables and needed to truncate data older than a few months. This is fine if you can set up summary tables that maintain the most important details about these events, but it’s not an ideal situation.
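The load-then-truncate pattern described above can be sketched with SQLite standing in for an analytics database such as Vertica or Redshift. The table names, columns, and cutoff date are assumptions for illustration; a production ETL would use the database's bulk loader rather than row inserts.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Fixed schema for event data, as this approach requires.
conn.execute("CREATE TABLE events (user_id TEXT, event_date TEXT, event_type TEXT)")
conn.execute(
    "CREATE TABLE daily_summary (event_date TEXT, event_type TEXT, event_count INTEGER)"
)

# Rows parsed from staged event files (hypothetical sample data).
staged_rows = [
    ("u1", "2017-01-05", "match_start"),
    ("u2", "2017-01-05", "match_start"),
    ("u1", "2017-06-01", "match_start"),
]
conn.executemany("INSERT INTO events VALUES (?, ?, ?)", staged_rows)

# Summarize old events before truncating, so key details survive the delete.
cutoff = "2017-03-01"
with conn:  # run both statements in a single transaction
    conn.execute(
        "INSERT INTO daily_summary "
        "SELECT event_date, event_type, COUNT(*) FROM events "
        "WHERE event_date < ? GROUP BY event_date, event_type",
        (cutoff,),
    )
    conn.execute("DELETE FROM events WHERE event_date < ?", (cutoff,))

remaining = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
summary = conn.execute(
    "SELECT event_date, event_type, event_count FROM daily_summary"
).fetchall()
```

After the truncation only the summary row remains for January, so any question that needs per-user detail from that period can no longer be answered.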

One of the issues with this approach is that the staging server becomes a central point of failure. It’s also possible for bottlenecks to arise where one game sends way too many events, causing events to be dropped across all of the titles. Another issue is query performance as you scale up the number of analysts working with the database. A team of a few analysts working with a few months of gameplay data may work fine, but after collecting years of data and growing the number of analysts, query performance can be a significant problem, causing some queries to take hours to complete.

The main benefits of this approach are that all event data is available in a single location queryable with SQL and great tooling is available, such as Tableau and DataGrip, for working with relational databases. The drawbacks are that it’s expensive to keep all data loaded into a database like Vertica or Redshift, events need to have a fixed schema, and truncating tables may be necessary to keep the servers performant.

Another issue with using a database as the main interface for data is that machine learning tools such as Spark’s MLlib cannot be used effectively, since the relevant data needs to be unloaded from the database before it can be operated on. One of the ways of overcoming this limitation is to store gameplay data in a format and storage layer that works well with Big Data tools, such as saving events as Parquet files on S3. This type of configuration became more popular in the next era, and it gets around the need to truncate tables and reduces the cost of keeping all data.

Data Lake Era

The data storage pattern that was most common while I was working as a data scientist in the games industry was a data lake. The general pattern is to store semi-structured data in a distributed database, and run ETL processes to extract the most relevant data to analytics databases. A number of different tools can be used for the distributed database: at Electronic Arts we used Hadoop, at Microsoft Studios we used Cosmos, and at Twitch we used S3.

This approach enables teams to scale to massive volumes of data, and provides additional fault tolerance. The main downside is that it introduces additional complexity, and can result in analysts having access to less data than if a traditional database approach was used, due to lack of tooling or access policies. Most analysts will interact with data in the same way in this model, using an analytics database populated from data lake ETLs.

One of the benefits of this approach is that it supports a variety of different event schemas, and you can change the attributes of an event without impacting the analytics database. Another advantage is that analytics teams can use tools such as Spark SQL to work with the data lake directly. However, most places I worked at restricted access to the data lake, eliminating many of the benefits of this model.
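The schema flexibility described above can be shown with a short sketch: events are kept in the lake as semi-structured JSON, and an ETL step extracts only the columns the analytics database needs. The event fields and the `ANALYTICS_COLUMNS` tuple are hypothetical names for this example.

```python
import json

# Semi-structured records as they might sit in the lake. The second purchase
# carries a newer "currency" attribute that the first one lacks.
lake_records = [
    '{"type": "purchase", "user_id": "u1", "amount": 4.99}',
    '{"type": "purchase", "user_id": "u2", "amount": 9.99, "currency": "USD"}',
    '{"type": "login", "user_id": "u1"}',
]

# Columns the analytics table expects; extra attributes are simply ignored.
ANALYTICS_COLUMNS = ("user_id", "amount")

def extract_purchases(records):
    """Pull purchase events from the lake into fixed-width rows for the database."""
    rows = []
    for raw in records:
        event = json.loads(raw)
        if event.get("type") != "purchase":
            continue
        rows.append(tuple(event.get(col) for col in ANALYTICS_COLUMNS))
    return rows

purchase_rows = extract_purchases(lake_records)
```

Adding the `currency` attribute did not require touching the analytics table, and the full record is still available in the lake if that attribute is needed later.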

This approach scales to a massive amount of data, supports flexible event schemas, and provides a good solution for long-running batch queries. The downsides are that it may involve significant operational overhead, may introduce large event latencies, and may lack mature tooling for the end users of the data lake. An additional drawback with this approach is that usually a whole team is needed just to keep the system operational. This makes sense for large organizations, but may be overkill for smaller companies. One of the ways of taking advantage of a data lake without the cost of operational overhead is by using managed services.

Serverless Era

In the current era, analytics platforms incorporate a number of managed services, which enable teams to work with data in near real-time, scale up systems as necessary, and reduce the overhead of maintaining servers. I never experienced this era while I was working in the game industry, but saw signs of this transition happening. Riot Games is using Spark for ETL processes and machine learning, and needs to spin up infrastructure on demand. Some game teams are using elastic computing methods for game services, and it makes sense to utilize this approach for analytics as well.

This approach has many of the same benefits as using a data lake, autoscales based on query and storage needs, and has minimal operational overhead. The main drawbacks are that managed services can be expensive, and taking this approach will likely result in using platform specific tools that are not portable to other cloud providers.

In my career I had the most success working with the database era approach, since it provided the analytics team with access to all of the relevant data. However, it wasn’t a setup that would continue to scale and most teams that I worked on have since moved to data lake environments. In order for a data lake environment to be successful, analytics teams need access to the underlying data, and mature tooling to support their processes. For a startup, the serverless approach is usually the best way to start building a data pipeline, because it can scale to match demand and requires minimal staff to maintain the data pipeline. The next post will walk through building a sample pipeline with managed services.