Data analysis to make powerful decisions (source)

AI has taken over many of our mundane tasks and made life easier for us in the process. The credit goes to the enormous amount of research and dedication that researchers, data scientists and developers have put into gathering, studying and reshaping data. Not just the IT sector but all sorts of industries have benefited from these advancements, and none of it would have been possible without improvements in the tools for the job.

When I say “data science”, I am referring to the collection of tools that turn data into real-world actions. These include machine learning, database technologies, statistics, programming, and domain-specific technologies.

With existing tools improving and newer ones entering the data science scene, many tasks that were once too intricate or unmanageable have become achievable. The core idea behind these tools is to unite data analysis, machine learning, statistics and related disciplines to make the most of data. These tools are critical for anyone looking to dive into data science, and picking the right ones can make a world of difference.

Information is the oil of the 21st century, and analytics is the combustion engine.

- Peter Sondergaard

Open source software for reliable, distributed, scalable computing.

Hadoop: open-source software for reliable, distributed, scalable computing (source)

Apache Hadoop is open-source software from the Apache Software Foundation, released under the Apache License 2.0. By processing data in parallel across clusters of nodes, it makes complex computational problems and data-intensive tasks tractable. Hadoop does this by splitting large files into blocks, distributing them across the nodes, and sending processing instructions to the nodes that hold the data. The modules that make up Hadoop, and the benefits they bring, are:

● Hadoop Common provides the shared libraries and utilities used by the other modules

● Hadoop Distributed File System (HDFS) provides the file system and the mechanism for splitting data into blocks and distributing them across nodes

● Hadoop YARN manages cluster resources and schedules jobs

● Hadoop MapReduce handles the parallel processing

● Speed up disk-powered performance by up to 10 times per project

● Integrate with external apps and software solutions seamlessly
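The MapReduce model is easiest to see in the classic word-count job. Below is a minimal single-machine sketch in plain Python. It is not Hadoop's API, and the function names are hypothetical. The input is split into chunks that stand in for HDFS blocks. A map step emits (word, 1) pairs for each chunk, a shuffle step groups the pairs by word, and a reduce step sums the counts. On a real cluster, YARN would schedule the map and reduce tasks across different nodes.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(lines, chunk_size):
    """Split the input into fixed-size chunks, standing in for HDFS blocks."""
    return [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]


def map_chunk(chunk):
    """Map phase: emit a (word, 1) pair for every word in the chunk."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]


def shuffle(mapped_outputs):
    """Shuffle phase: group all emitted values by key."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_group(key, values):
    """Reduce phase: sum the counts for one word."""
    return key, sum(values)


def word_count(lines, chunk_size=2):
    chunks = split_into_chunks(lines, chunk_size)
    # Run the map tasks concurrently, as separate nodes would on a cluster.
    with ThreadPoolExecutor() as pool:
        mapped = list(pool.map(map_chunk, chunks))
    groups = shuffle(mapped)
    return dict(reduce_group(k, v) for k, v in groups.items())


if __name__ == "__main__":
    text = [
        "data beats opinions",
        "big data needs big tools",
        "tools turn data into decisions",
    ]
    print(word_count(text))
```

Each map task sees only its own chunk, so the job scales out by adding chunks and nodes. Only the shuffle needs to move data between them.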

Turn data into insights

Short for Statistical Analysis System, SAS is a statistical tool developed by SAS Institute and one of the oldest data analysis tools available. At the time of writing, the latest stable release, 9.4M6, had come out in November 2018. Key features offered by SAS are:

● Easy to learn with loads of available tutorials

● A well-packed suite of tools

● Simple yet powerful GUI

● Granular analysis of textual content

● Seamless and dedicated technical support

● Visually appealing reports

● Identification of spelling errors and grammar mistakes for a more accurate analysis

The goal is to turn data into information, and information into insight.

- Carly Fiorina

SAS allows you to mine, alter, manage and retrieve data from a variety of sources. Paired with SQL (through its PROC SQL procedure), SAS becomes an extremely efficient tool for data access and analysis. SAS has grown into a suite of tools serving several purposes; some of these areas are:

● Data Mining

● Statistical Analysis

● Business Intelligence Applications

● Clinical Trial Analysis

● Econometrics & Time Series Analysis
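SAS gives access to SQL through PROC SQL. As a rough analogue of pairing SQL with statistical analysis (this is not SAS code), the sketch below uses two parts of Python's standard library. The built-in `sqlite3` module handles data access and the `statistics` module handles the analysis step. The table name, column names and sample data are made up for illustration.

```python
import sqlite3
import statistics


def summarize_by_region(rows):
    """Load rows into an in-memory table, query with SQL, then compute statistics."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)

    # Data access with SQL: total amount per region.
    totals = dict(
        conn.execute(
            "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
        ).fetchall()
    )

    # Statistical analysis on the retrieved values.
    amounts = [a for (a,) in conn.execute("SELECT amount FROM sales")]
    conn.close()
    return {
        "totals": totals,
        "mean": statistics.mean(amounts),
        "stdev": statistics.stdev(amounts),  # sample standard deviation
    }


if __name__ == "__main__":
    data = [("north", 120.0), ("south", 80.0), ("north", 100.0), ("south", 60.0)]
    print(summarize_by_region(data))
```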

Powerful and fastest growing data visualization tool

Tableau is a remarkable data visualization tool that was acquired in 2019 by Salesforce, one of the world's leading enterprise CRM providers. Focused on presenting data clearly in a short time, Tableau can support quicker decision-making. It can draw on a wide range of data sources, including online analytical processing (OLAP) cubes, cloud databases, spreadsheets and relational databases.

Tableau's convenience lets you stay focused on the statistics instead of worrying about setup. Getting started is as easy as dragging and dropping a dataset onto the application, and setting up filters and customizing the dataset is a breeze.

Key features of Tableau include:

● Comprehensive end-to-end analytics

● Advanced data calculations

● Effortless content discovery

● A fully protected system that reduces security risks to a bare minimum

● A responsive user interface that fits all types of devices and screen sizes
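Dragging a dimension (such as region) and a measure (such as sales) onto a Tableau view aggregates the measure across that dimension. This works much like a roll-up on an OLAP cube. The plain-Python sketch below only illustrates that aggregation and is not Tableau's engine. The records and field names are hypothetical.

```python
from collections import defaultdict


def roll_up(records, dimensions, measure):
    """Sum `measure` over every combination of the given dimension fields."""
    cube = defaultdict(float)
    for record in records:
        key = tuple(record[d] for d in dimensions)
        cube[key] += record[measure]
    return dict(cube)


records = [
    {"region": "East", "year": 2019, "sales": 100.0},
    {"region": "East", "year": 2020, "sales": 150.0},
    {"region": "West", "year": 2019, "sales": 80.0},
    {"region": "West", "year": 2020, "sales": 120.0},
]

# Coarse view: one value per region.
print(roll_up(records, ["region"], "sales"))
# Finer view: region broken down by year, like adding a second dimension to the view.
print(roll_up(records, ["region", "year"], "sales"))
```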


When it comes to artificial intelligence (AI), deep learning (DL) and machine learning (ML), TensorFlow is a name you will hear one way or another. Developed by Google, TensorFlow is an open-source library that covers the whole workflow: building and training models, deploying them on platforms as diverse as computers, smartphones and servers, and getting the most out of limited resources.

Using TensorFlow, you can create statistical models and data visualizations and access some of the best-in-class, widely used features for ML and DL. Its first-class Python API makes it a powerful tool for storing, filtering and manipulating numbers and data in distributed numerical computations.
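The sketch below is a small illustration of the Python API. It stores data in a tensor and filters it with `tf.boolean_mask`. It then trains a one-variable linear model with `tf.GradientTape` using plain gradient descent. The dataset (points on y = 2x + 1), the learning rate and the step count are arbitrary choices for the example.

```python
import tensorflow as tf

# Store and filter data: keep only the non-negative readings.
readings = tf.constant([3.0, -1.0, 4.0, -5.0, 2.0])
valid = tf.boolean_mask(readings, readings >= 0)

# Build and train a tiny model y = w * x + b on points from y = 2x + 1.
x = tf.constant([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0
w = tf.Variable(0.0)
b = tf.Variable(0.0)
learning_rate = 0.05

for _ in range(500):
    with tf.GradientTape() as tape:
        # Mean squared error between predictions and targets.
        loss = tf.reduce_mean(tf.square(w * x + b - y))
    dw, db = tape.gradient(loss, [w, b])
    # Gradient descent step.
    w.assign_sub(learning_rate * dw)
    b.assign_sub(learning_rate * db)

print("valid readings:", valid.numpy())
print(f"learned w={w.numpy():.3f}, b={b.numpy():.3f}")
```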

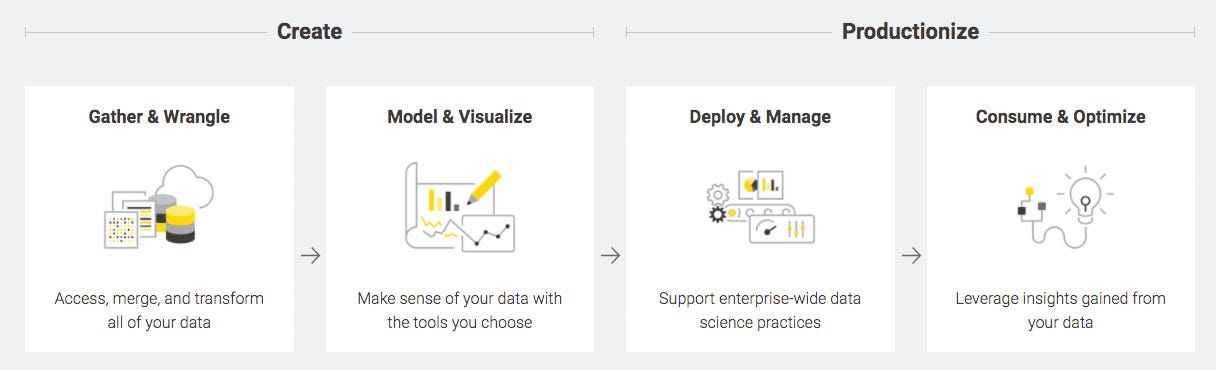

Founded in 2012, DataRobot positions itself as an enterprise-level solution for all your AI needs. It aims to automate the end-to-end process of building, deploying and maintaining AI models. DataRobot can get you started with a few simple clicks and get a lot done without requiring you to be an expert. DataRobot offers the following capabilities for your business needs:

● Automated ML

● Automated Time Series

● MLOps

● Paxata (data preparation)

These can be used individually or combined, and deployed in the cloud or on-premises. This lets data scientists focus on the problem at hand rather than on setting things up.
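This is not DataRobot's interface or client library. It is a minimal scikit-learn sketch of the core idea behind automated ML: try several candidate models, score each with cross-validation, and keep the best one on a leaderboard. The candidate list and the iris dataset are arbitrary choices. Real AutoML platforms also automate feature engineering, hyperparameter tuning and deployment.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier


def pick_best_model(X, y, candidates, cv=5):
    """Score each candidate with k-fold cross-validation and return the best one."""
    scores = {
        name: cross_val_score(model, X, y, cv=cv).mean()
        for name, model in candidates.items()
    }
    best_name = max(scores, key=scores.get)
    return best_name, scores


X, y = load_iris(return_X_y=True)
candidates = {
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "decision_tree": DecisionTreeClassifier(random_state=0),
    "k_nearest_neighbors": make_pipeline(StandardScaler(), KNeighborsClassifier()),
}
best_name, scores = pick_best_model(X, y, candidates)

# Leaderboard, highest mean accuracy first.
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.3f}")
print("best:", best_name)
```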


Start making data-driven decisions today.

BigML was founded with one goal: to make building and sharing datasets and models easier for everyone, and ultimately to make machine learning easier. It aims to offer powerful ML algorithms within a single framework, reducing dependencies. BigML’s expertise includes the following areas:

● Classification

● Regression

● Time Series Forecasting

● Cluster Analysis

● Anomaly Detection

● Topic Modelling

BigML includes an easy-to-use GUI with interactive visualizations, making decision-making a breeze for data scientists. Its REST API can get you up and running in no time, and the ability to export models as JSON PML or PMML makes moving them from one platform to another seamless. Both on-premises and off-premises deployment are also supported.
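Exporting a model as JSON makes it portable, because the model becomes plain data that any runtime can load and evaluate. The toy decision tree below shows that idea using a hypothetical, much-simplified schema. This schema is not BigML's actual JSON PML format, and the split thresholds are illustrative. The tree is serialized with Python's `json` module, loaded back, and walked to make a prediction.

```python
import json

# A tiny decision tree in a hypothetical JSON schema (not BigML's real format).
MODEL_JSON = json.dumps({
    "field": "petal_length",
    "threshold": 2.45,
    "left": {"prediction": "setosa"},
    "right": {
        "field": "petal_width",
        "threshold": 1.75,
        "left": {"prediction": "versicolor"},
        "right": {"prediction": "virginica"},
    },
})


def predict(node, row):
    """Walk the tree: go left when the feature value is <= the threshold."""
    while "prediction" not in node:
        branch = "left" if row[node["field"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["prediction"]


model = json.loads(MODEL_JSON)  # "import" the exported model on any platform
print(predict(model, {"petal_length": 1.4, "petal_width": 0.2}))
print(predict(model, {"petal_length": 5.1, "petal_width": 2.3}))
```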

Free and open-source data analytics, reporting and integration platform

KNIME is a multi-purpose tool for data reporting and analytics that also makes it easy to apply data mining and machine learning to your data. KNIME’s intuitive GUI allows for easy extraction, transformation and loading (ETL) of data with minimal programming knowledge. It lets you build visual data pipelines that create models and interactive views, and it can work with large data volumes.

KNIME: free and open-source data analytics, reporting and integration platform (source)

KNIME’s integration capabilities let you extend its core functionality by connecting to database management systems such as:

● SQLite

● SQL Server

● MySQL

● Oracle

● PostgreSQL

● and more
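KNIME builds ETL pipelines visually by connecting nodes. As a rough code analogue (not KNIME's API), the sketch below chains three Python functions, each standing in for one node:

- extract: read rows from CSV text
- transform: filter the rows and derive a column
- load: write the result into an SQLite table, one of the databases listed above

The column names and sample data are hypothetical.

```python
import csv
import io
import sqlite3

RAW_CSV = """customer,country,amount
Alice,DE,120.50
Bob,US,35.00
Chen,DE,80.25
Dana,FR,0
"""


def extract(text):
    """Reader node: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Filter and derive nodes: drop zero amounts and add a size category."""
    transformed = []
    for row in rows:
        amount = float(row["amount"])
        if amount <= 0:
            continue  # filter out empty orders
        size = "large" if amount >= 100 else "small"
        transformed.append((row["customer"], row["country"], amount, size))
    return transformed


def load(rows, conn):
    """Writer node: store the transformed rows in an SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (customer TEXT, country TEXT, amount REAL, size TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
summary = conn.execute(
    "SELECT country, COUNT(*), SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
print(summary)
```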

Apache Spark is a unified analytics engine for large-scale data processing.

Apache Spark, from the Apache Software Foundation, is a tool for analyzing and processing large-scale data. It provides an interface for programming entire clusters of machines, with implicit data parallelism and fault tolerance. To run on a cluster, Spark requires a cluster manager and a distributed storage system. It can also build on the Hadoop ecosystem, for example by using YARN as its cluster manager and HDFS (or the MapR file system) as its storage layer.

Spark also supports data cleansing, transformation, and model building and evaluation. Because Spark can keep working data in memory instead of writing intermediate results to disk, it is extremely fast at processing data. Its APIs for several programming languages (Scala, Java, Python and R), its rich set of transformations and its open-source nature make it a good option for data scientists.
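Spark's fault tolerance rests on lineage. Each dataset records the transformations that produced it, so a lost partition can be recomputed instead of being kept as a replica. The toy class below is a single-process sketch of that idea and is not Spark's API; the names `ToyRDD`, `compute_partition` and `lose_partition` are hypothetical. It splits data into partitions, applies `map` and `filter` lazily, and recomputes a partition whose cached result has been lost.

```python
class ToyRDD:
    """A toy, single-process imitation of a partitioned, lazily evaluated dataset."""

    def __init__(self, partitions, lineage=()):
        self.source = partitions        # original input partitions
        self.lineage = list(lineage)    # recorded transformations, applied lazily
        self.cache = {}                 # partition index -> computed result

    def map(self, fn):
        # Nothing is computed here; the transformation is only recorded.
        return ToyRDD(self.source, self.lineage + [("map", fn)])

    def filter(self, pred):
        return ToyRDD(self.source, self.lineage + [("filter", pred)])

    def compute_partition(self, index):
        """Rebuild one partition by replaying the lineage on its source data."""
        data = list(self.source[index])
        for kind, fn in self.lineage:
            data = [fn(x) for x in data] if kind == "map" else [x for x in data if fn(x)]
        return data

    def collect(self):
        # Partitions are independent, so on a cluster these would run in parallel.
        for i in range(len(self.source)):
            if i not in self.cache:
                self.cache[i] = self.compute_partition(i)
        return [x for i in range(len(self.source)) for x in self.cache[i]]

    def lose_partition(self, index):
        """Simulate a worker failure by discarding a computed partition."""
        self.cache.pop(index, None)


rdd = ToyRDD([[1, 2, 3], [4, 5, 6]]).map(lambda x: x * 10).filter(lambda x: x > 20)
print(rdd.collect())   # first run computes every partition
rdd.lose_partition(1)  # a "worker" fails
print(rdd.collect())   # partition 1 is recomputed from its lineage
```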

RapidMiner: data science platform (source)

RapidMiner is a data science platform for teams that unites data prep, machine learning, and predictive model deployment.

RapidMiner provides tools that support the whole process, from the initial preparation of your data to the last step, i.e. analyzing the deployed model. Serving as a complete end-to-end data science package in itself, RapidMiner also integrates capabilities such as:

● Machine Learning

● Deep Learning

● Text Mining

● Predictive Analysis

Targeted at data scientists and analysts, RapidMiner offers features such as:

● Data Preparation

● Results Visualization

● Model Validation

● Plugins for expanding core functionality

● Over 1,500 native algorithms

● Real-time data tracking and analytics

● Support for dozens of third-party integrations

● Comprehensive reporting abilities

● Scalability for teams of any size

● Superior security features
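RapidMiner assembles data preparation, modeling, validation and deployment visually. The scikit-learn sketch below shows the same stages in code and is not RapidMiner's API. It builds a pipeline that scales features and fits a classifier, then checks a held-out validation score. A "deployment" step serializes the fitted pipeline with `pickle` and reloads it to score new data. The dataset and model are arbitrary choices.

```python
import pickle

from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Data preparation and model in one pipeline, so the same steps run at prediction time.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("model", LogisticRegression(max_iter=1000)),
])
pipeline.fit(X_train, y_train)

# Model validation on data the model has not seen.
accuracy = pipeline.score(X_test, y_test)
print(f"hold-out accuracy: {accuracy:.3f}")

# "Deployment": serialize the fitted pipeline, then reload it as a serving process would.
blob = pickle.dumps(pipeline)
deployed = pickle.loads(blob)
print(deployed.predict(X_test[:5]))
```

Keeping preparation and model in one pipeline means the serialized object applies exactly the same scaling at prediction time. Only unpickle files from sources you trust.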

Matplotlib makes easy things easy and hard things possible.

Matplotlib is one of the essential open-source plotting libraries for Python that every data scientist should know. Not only does it provide extensive customization options, but it does so without overcomplicating anything. Anyone familiar with Python knows how powerful the language can be, thanks to its vast collection of libraries and its integration with other programming languages.

With Matplotlib’s simple API, data scientists can create compelling data visualizations. Support for several export formats, such as PNG, PDF and SVG, makes it easy to take your custom graph to the platform of your choice.
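Here is a minimal example of the pyplot API. It plots two series with a title, axis labels and a legend, then exports the same figure to PNG and SVG with `savefig`. The data and file names are placeholders. The non-interactive Agg backend is selected so the script also runs on machines without a display.

```python
import matplotlib

matplotlib.use("Agg")  # render to files only; no GUI window needed
import matplotlib.pyplot as plt

months = ["Jan", "Feb", "Mar", "Apr", "May"]
revenue = [12, 15, 14, 18, 21]
costs = [10, 11, 11, 12, 13]

fig, ax = plt.subplots(figsize=(6, 4))
ax.plot(months, revenue, marker="o", label="Revenue")
ax.plot(months, costs, marker="s", linestyle="--", label="Costs")
ax.set_title("Revenue vs. costs")
ax.set_xlabel("Month")
ax.set_ylabel("Amount (k$)")
ax.legend()
fig.tight_layout()

# Export the same figure in several formats; the format is inferred from the extension.
for filename in ("revenue.png", "revenue.svg"):
    fig.savefig(filename)
plt.close(fig)
```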

Get a better picture of your data

Part of Microsoft’s Office suite, Excel may look like a simple spreadsheet tool to the average user, but in the hands of power users such as data scientists it becomes an extremely efficient one. Excel is easy for newcomers to pick up, and once they discover how powerful it is, they tend to stay.

Excel's data visualization features present data in a way that makes at-a-glance decision-making effortless. Its formulas are the cherry on top: they turn Excel from a mere number-presenting application into one that can also process large amounts of data, from concatenation and string length to sums, averages and hundreds more.
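For readers who move between Excel and Python, the sketch below pairs the kinds of formulas mentioned above (CONCAT, LEN, SUM and AVERAGE) with plain-Python equivalents. The cell ranges in the comments and the sample values are hypothetical.

```python
first_names = ["Ada", "Grace", "Alan"]           # column A (A2:A4)
last_names = ["Lovelace", "Hopper", "Turing"]    # column B (B2:B4)
scores = [91, 78, 85]                            # column C (C2:C4)

# =CONCAT(A2, " ", B2), filled down into column D
full_names = [f"{first} {last}" for first, last in zip(first_names, last_names)]

# =LEN(D2), filled down
name_lengths = [len(name) for name in full_names]

# =SUM(C2:C4) and =AVERAGE(C2:C4)
total = sum(scores)
average = total / len(scores)

print(full_names)
print(name_lengths)
print(total, round(average, 2))
```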

Data plays a decisive role in today’s AI-enabled world, enabling data scientists to make impactful decisions. Without capable data science tools, that task becomes painfully intricate. We’ve made it easier for you to get insight into the available tools, regardless of your level of expertise. If you’re drawn to data science, you have a broad collection of tools to pick from. While some of these tools deserve to be called all-rounders, others cater to specific niches.

Note: To avoid any misunderstanding, I want to point out that this article represents only my personal opinion, and you have every right to disagree with it.